Technical

PaperAI - Neuroevolution-based AI for Paper.io

Hello everyone, I am back~

I’ve been really busy with SAT prep, which is primarily why I haven’t posted much the summer, sorry 😭 At any rate, I am back with my new project, PaperAI!

You have probably played the game paper.io before. Essentially, it is a multiplayer game where each player controls a blob to gradually claim more territories. When a blob encloses an area, everything within becomes part of their property, but their opponents can do the same and steal others’ lands.

Needless to say, I am pretty pro at this game. After only playing for one week, I have successfully achieved a high score of 10% 😎. However, I am of course not satisfied with this score. Being a special snowflake, I decided that actually playing the game is too much effort, and that I need to beat it without playing! Upon deliberation, I found a solution: create a bot that wins the game for me

Note that the distance is input as (1 / distance) since I wanted the value to always be between 0 and 1, and it makes sense that the difference between 1 and 2 is much more significant than 10 and 11. Moreover, this allows the model to conveniently label non-detected as 0 (1 / infinity), whereas a 0 in plain distance could be confused as being infinitely close.

Finally, the input layer has 4 extra nodes called “memory nodes,” which allows the AI to remember prior information.

So in total, the brain has 6 * 8 + 4 = 52 pieces of information for making the decision, turning either left, right, down, or up.

The genetic algorithm mutates at a rate of 5% using Gaussian distribution. The population has a fixed size of 2000 agents, and they crossover by picking a random pivot point and swapping genes at both sides.

For the fitness function, I used the equation of (# of claimed territory + 0.1 * # of trails) + [killed enemy] * 144. The # of trails is to encourage early exploration, and the final boolean [killed enemy] is to encourage the agents to attack instead of always defending.

I have trained the network for over 1000 generations, and you can either play them or let them play themselves. Use WASD to control the direction. (Try getting 100% of the squares, it might be harder than you think for some generations)

One problem that I couldn’t fully solve is persistence of the strategies. Often, a strategy would emerge to overtake the population, but as it becomes more predominant, its advantages fade and eventually become lost. As a result, although the AI did manage to come up with many effective strategies/countermeasures, only a handful of strategies are actually present for each generation. I tried to counter this by increasing the population size and hoping the gene would be saved, thus able to resurface at a later generation, but I am not sure if it was effective enough. Please comment if you have any ideas.

Thanks for reading 😊

I’ve been really busy with SAT prep, which is primarily why I haven’t posted much the summer, sorry 😭 At any rate, I am back with my new project, PaperAI!

You have probably played the game paper.io before. Essentially, it is a multiplayer game where each player controls a blob to gradually claim more territories. When a blob encloses an area, everything within becomes part of their property, but their opponents can do the same and steal others’ lands.

Needless to say, I am pretty pro at this game. After only playing for one week, I have successfully achieved a high score of 10% 😎. However, I am of course not satisfied with this score. Being a special snowflake, I decided that actually playing the game is too much effort, and that I need to beat it without playing! Upon deliberation, I found a solution: create a bot that wins the game for me

Which Model?

Naturally, the first question is “What type of AI should we use?” I decided to use genetic algorithm + neural network (aka Neuroevolution), mainly for two reasons:

- It’s very difficult to formally define a “right move”, which renders traditional methods of training neural networks, namely backward propagation, impractical. In contrast, genetic algorithm greatly mitigates this issue since it doesn’t need to assess if a move is good at each step, but only if the final state is good.

- Neural networks are versatile and can adopt many strategies just by fine-tuning the weights. It also meets the requirement of genetic algorithm: a small change in weights usually reflects a small change in performance.

What is a neural network?

As mentioned earlier, the neural network serves as the “brain” of the AI agents. They take in sensory information (input layer), process them (hidden layer), and output appropriate actions (output layer).

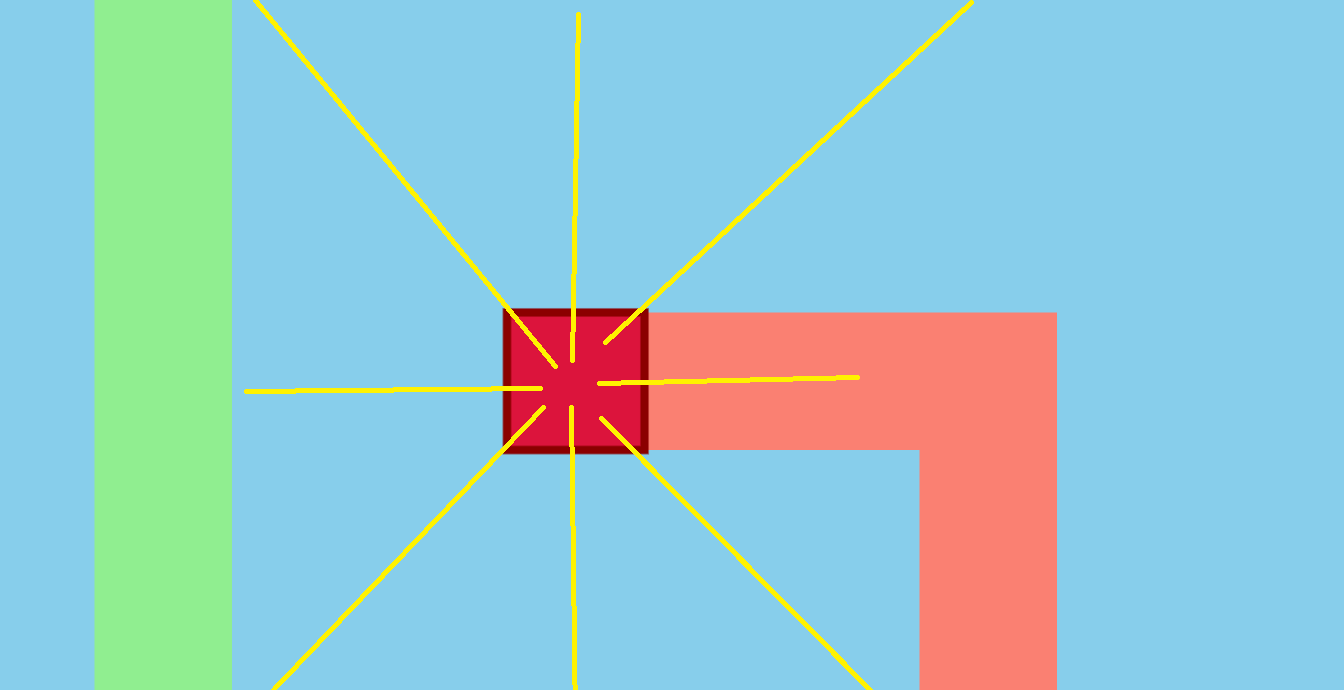

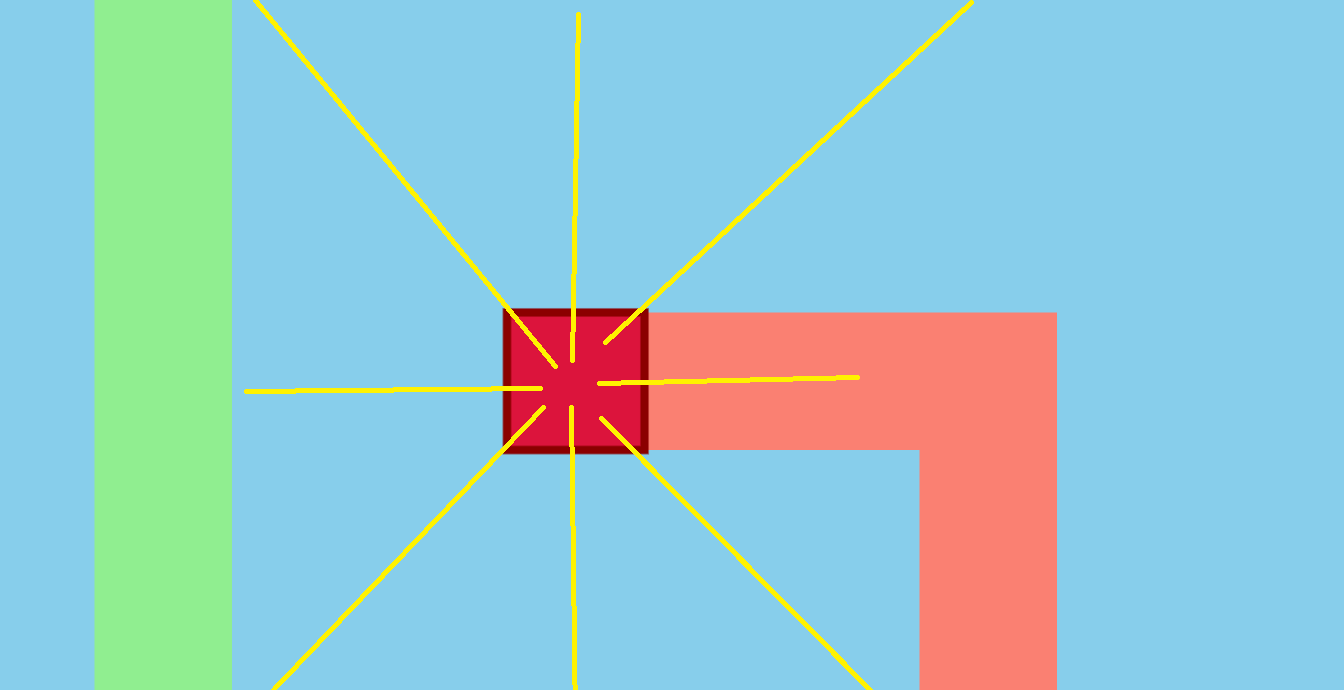

How and what do the agents “see?”

The agent sees in 8 directions and gathers information about the following 6 properties:

- Distance to the wall

- Distance to enemy’s body

- Distance to enemy’s trail

- Distance to enemy’s head

- Distance to agent’s body

- Distance to agent’s trail

Note that the distance is input as (1 / distance) since I wanted the value to always be between 0 and 1, and it makes sense that the difference between 1 and 2 is much more significant than 10 and 11. Moreover, this allows the model to conveniently label non-detected as 0 (1 / infinity), whereas a 0 in plain distance could be confused as being infinitely close.

Finally, the input layer has 4 extra nodes called “memory nodes,” which allows the AI to remember prior information.

So in total, the brain has 6 * 8 + 4 = 52 pieces of information for making the decision, turning either left, right, down, or up.

Genetic Algorithm

The genetic algorithm is the main training component. I have already written many blog posts (such as TriangleOnly) on it that you can check out. TL;DR, imagine each AI agent as an animal that can mate with other agents to produce offspring, and mutate its brain structure randomly. Then for each generation, we select the fittest animals (i.e. best at playing paper.io) and allow them to reproduce among themselves, passing down their beneficial traits. Then by the law of evolution, the population will gradually get better and better.Specifics

The neural network’s architecture is 52 -> 40 -> 20 -> 8. The first layer is for input, the second and third are hidden layers for processing, and the fourth is for outputting the action. Four of the output layer nodes decide whether to go [up, down, left, right], and the other 4 are memory nodes that return to the input layer at the next iteration.The genetic algorithm mutates at a rate of 5% using Gaussian distribution. The population has a fixed size of 2000 agents, and they crossover by picking a random pivot point and swapping genes at both sides.

For the fitness function, I used the equation of (# of claimed territory + 0.1 * # of trails) + [killed enemy] * 144. The # of trails is to encourage early exploration, and the final boolean [killed enemy] is to encourage the agents to attack instead of always defending.

Demo

Finally, the demonstration :DI have trained the network for over 1000 generations, and you can either play them or let them play themselves. Use WASD to control the direction. (Try getting 100% of the squares, it might be harder than you think for some generations)

Generation:

Conclusion

Overall, I think the project went okay. The agents developed sophisticated strategies against their opponents, albeit often only targetting a certain play style. Furthermore, I believe if trained for more generations, the strategies will become even more comprehensive. One problem that I couldn’t fully solve is persistence of the strategies. Often, a strategy would emerge to overtake the population, but as it becomes more predominant, its advantages fade and eventually become lost. As a result, although the AI did manage to come up with many effective strategies/countermeasures, only a handful of strategies are actually present for each generation. I tried to counter this by increasing the population size and hoping the gene would be saved, thus able to resurface at a later generation, but I am not sure if it was effective enough. Please comment if you have any ideas.

Future work

This project is completely open-source and can be found at https://github.com/CodeTiger927/PaperAI. Feel free to improve upon it and publish it as your own (I would frankly be honored xd). If you have any suggestions/want to see me continue this project, comment below :D. I really appreciate them.Thanks for reading 😊

CodeTiger

2021.8.29